Kudu Sink¶

Download connector: Kudu Connector 1.2 for Kafka, Kudu Connector 1.1 for Kafka

A Connector and Sink to write events from Kafka to Kudu. The connector takes the value from the Kafka Connect SinkRecords and inserts a new entry into Kudu.

Prerequisites¶

- Apache Kafka 0.11.x or above

- Kafka Connect 0.11.x or above

- Kudu 0.8 or above

- Java 1.8

Features¶

- KCQL routing and querying: Kafka topic payload field selection is supported, allowing you to select the fields written to Kudu

- Error policies for handling failures

- Auto creation of tables

- Auto evolution of tables.

KCQL Support¶

{ INSERT | UPSERT } INTO kudu_table SELECT { FIELDS, ... }

FROM kafka_topic_name

[AUTOCREATE]

[DISTRIBUTEBY FIELD, ... INTO NBR_OF_BUCKETS BUCKETS]

[AUTOEVOLVE]

Tip

You can specify multiple KCQL statements separated by ; to have the connector sink multiple topics.
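
As an illustration of this tip, a connector configuration fragment that sinks two topics might look like the following. The topic and table names (topicA, topicB, tableA, tableB) are placeholders for illustration only, and the topics option lists every topic referenced in the KCQL.

```properties
# Hypothetical topic and table names, for illustration only
topics=topicA,topicB
# Two KCQL statements separated by a semicolon, one per topic
connect.kudu.kcql=INSERT INTO tableA SELECT * FROM topicA;UPSERT INTO tableB SELECT * FROM topicB
```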

The Kudu sink supports KCQL, the Kafka Connect Query Language. The following KCQL support is available:

- Field selection

- Target table selection

- Insert and upsert modes

- Auto creation of tables

- Auto evolution of tables.

Kudu at present doesn’t have a concept of databases like Impala. If you want to insert into a table managed by Impala, you need to set the target table accordingly:

impala::database.impala_table

Example:

-- Insert mode, select all fields from topicA and write to tableA

INSERT INTO tableA SELECT * FROM topicA

-- Insert mode, select 3 fields and rename from topicB and write to tableB

INSERT INTO tableB SELECT x AS a, y AS b, z AS c FROM topicB

-- Upsert mode, select all fields from topicC, auto create tableC and auto evolve, use field1 and field2 as the primary keys

UPSERT INTO tableC SELECT * FROM topicC AUTOCREATE DISTRIBUTEBY field1, field2 INTO 10 BUCKETS AUTOEVOLVE

Write Modes¶

Insert Mode¶

Insert is the default write mode of the sink.

Kafka can currently provide exactly-once delivery semantics. However, to ensure no errors are produced when unique constraints have been implemented on the target tables, the sink can run in UPSERT mode. If the error policy has been set to NOOP, the Sink will discard the error and continue processing; however, it currently makes no attempt to distinguish violations of integrity constraints from other exceptions such as casting issues.

Upsert Mode¶

The Sink supports Kudu upserts, which replace the existing row if a match is found on the primary keys. Kafka can provide exactly-once delivery semantics and order is guaranteed within partitions. If the same record is delivered twice to the sink, this mode results in an idempotent write. If records are delivered with the same field or group of fields that are used as the primary key on the target table, but with different values, the existing record in the target table will be updated.

Since records are delivered in the order they were written per partition, the write is idempotent on failure or restart. Redelivery produces the same result.
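
A minimal sketch of a configuration fragment that runs the sink in upsert mode, assuming the kudu-test topic and the impala::default.kudu_test table used in the QuickStart on this page:

```properties
topics=kudu-test
# UPSERT replaces the row when the primary key already exists in Kudu,
# so a redelivered record leaves the table unchanged
connect.kudu.kcql=UPSERT INTO impala::default.kudu_test SELECT * FROM kudu-test
```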

Auto Create¶

If you set AUTOCREATE, the sink will use the schema attached to the topic to create a table in Kudu. The primary keys are set by the PK keyword. The field values will be concatenated and separated by a -. If no fields are set, the topic name, partition and message offset are used.

Kudu has a number of partition strategies. The sink only supports the HASH partition strategy; you can control which fields are used to hash and the number of buckets which should be created. This behaviour is controlled via the DISTRIBUTEBY clause.

DISTRIBUTEBY id INTO 10 BUCKETS

Note

Tables that are created are not visible to Impala. You must map them in Impala yourself.

Auto Evolution¶

The Sink supports auto evolution of tables for each topic. This mapping is set in the connect.kudu.kcql option.

When set, the Sink will identify new schemas for each topic based on the schema version from the Schema Registry. New columns will be identified and an ALTER TABLE DDL statement issued against Kudu.

Note

Schema registry support is required for this feature.

Schema evolution can occur upstream, for example when new fields are added or a data type changes in the schema of the topic.

Upstream changes must follow the schema evolution rules laid out in the Schema Registry. This Sink only supports BACKWARD and FULL compatible schemas. If new fields are added, the Sink will attempt to perform an ALTER TABLE DDL statement against the target table to add columns. All columns added to the target table are set as nullable.

Fields cannot be deleted upstream. Fields should be of AVRO union type [null, <dataType>] with a default set. This allows the Sink to either retrieve the default value or null. The Sink is not aware that the field has been deleted as a value is always supplied to it.

If an upstream field is removed and the topic is not following the Schema Registry’s evolution rules, i.e. not full or backwards compatible, any errors will be handled according to the error policy.

Note

The Sink will never remove columns on the target table or attempt to change data types.
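
As a sketch, auto evolution could be enabled by adding AUTOEVOLVE to the KCQL statement and pointing the sink at the Schema Registry. The host name myhost and the topic and table names are assumptions for illustration.

```properties
topics=kudu-test
# Assumed Schema Registry location; replace with your own
connect.kudu.schema.registry.url=http://myhost:8081
# AUTOEVOLVE asks the sink to add new, nullable columns as the topic schema evolves
connect.kudu.kcql=INSERT INTO impala::default.kudu_test SELECT * FROM kudu-test AUTOEVOLVE
```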

Error Policies¶

Landoop sink connectors support error policies. These error policies allow you to control the behaviour of the sink if it encounters an error when writing records to the target system. Since Kafka retains the records, subject to the configured retention policy of the topic, the sink can ignore the error, fail the connector or attempt redelivery.

Throw

Any error on write to the target system will be propagated up and processing is stopped. This is the default behavior.

Noop

Any error on write to the target database is ignored and processing continues.

Warning

This can lead to missed errors if you don’t have adequate monitoring. Data is not lost as it’s still in Kafka, subject to Kafka’s retention policy. The sink currently does not distinguish between integrity constraint violations and other exceptions thrown by any drivers or the target system.

Retry

Any error on write to the target system causes a RetriableException to be thrown. This causes the Kafka Connect framework to pause and replay the message. Offsets are not committed. For example, if the table is offline, the write fails and the message can be replayed. With the Retry policy, the issue can be fixed without stopping the sink.

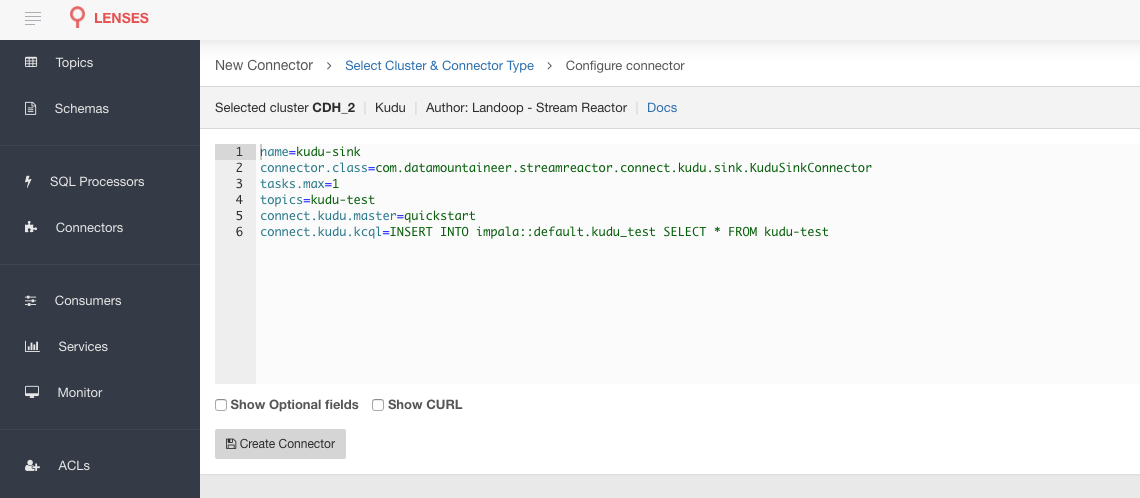
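
The Retry policy is driven by three options listed under Optional Configurations. A sketch of a fragment that retries a failed write up to 5 times, waiting 30 seconds between attempts, might look like this; the values 5 and 30000 are arbitrary choices for illustration, not recommendations.

```properties
# Redeliver the failed records instead of stopping the connector
connect.kudu.error.policy=RETRY
# Give up after 5 attempts (illustrative value; the configuration table lists 10)
connect.kudu.max.retries=5
# Wait 30 seconds between attempts (illustrative value; the configuration table lists 60000 ms)
connect.kudu.retry.interval=30000
```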

Lenses QuickStart¶

The easiest way to try this out is with Lenses Box, the pre-configured Docker image that comes with this connector pre-installed. Navigate to Connectors –> New Connector –> Sink –> Kudu and paste your configuration.

Kudu Setup¶

Download the Kudu QuickStart VM and check that it starts up (VM password is demo).

curl -s https://raw.githubusercontent.com/cloudera/kudu-examples/master/demo-vm-setup/bootstrap.sh | bash

Kudu Table¶

Let’s create a table in Kudu via Impala. The Sink does support auto creation of tables, but they are not yet synced with Impala.

#demo/demo

ssh demo@quickstart -t impala-shell

CREATE TABLE default.kudu_test (id INT,random_field STRING, PRIMARY KEY(id))

PARTITION BY HASH PARTITIONS 16

STORED AS KUDU

Note

The Sink will fail to start if the tables matching the topics do not already exist and the KCQL statement is not in auto create mode.

When creating a new Kudu table using Impala, you can create the table as an internal table or an external table.

Internal¶

An internal table is managed by Impala, and when you drop it from Impala, the data and the table truly are dropped. When you create a new table using Impala, it is generally an internal table.

External¶

An external table is not managed by Impala and dropping such a table does not drop the table from its source location. Instead, it only removes the mapping between Impala and Kudu.

See the Impala documentation for more information about internal and external tables. Here’s an example:

CREATE EXTERNAL TABLE default.kudu_test

STORED AS KUDU

TBLPROPERTIES ('kudu.table_name'='kudu_test');

Impala Databases and Kudu¶

Every Impala table is contained within a namespace called a database. The default database is called default, and users may create and drop additional databases as desired.

Note

When a managed Kudu table is created from within Impala, the corresponding Kudu table will be named impala::my_database.table_name

Tables created by the sink are not automatically visible to Impala. You must map the table in Impala.

CREATE EXTERNAL TABLE my_mapping_table

STORED AS KUDU

TBLPROPERTIES (

'kudu.table_name' = 'my_kudu_table'

);

Sink Connector QuickStart¶

Start Kafka Connect in distributed mode (see install).

In this mode a Rest Endpoint on port 8083 is exposed to accept connector configurations.

We developed a Command Line Interface to make interacting with the Connect Rest API easier. The CLI can be found in the Stream Reactor download under the bin folder. Alternatively, the Jar can be pulled from our GitHub releases page.

Installing the Connector¶

Connect, in production, should be run in distributed mode.

1. Install and configure a Kafka Connect cluster

2. Create a folder on each server called plugins/lib

3. Copy into the above folder the required connector jars from the Stream Reactor download

4. Edit connect-avro-distributed.properties in the etc/schema-registry folder and uncomment the plugin.path option. Set it to the root directory, i.e. plugins, of the folder you created in step 2 for the Stream Reactor connector jars.

5. Start Connect:

bin/connect-distributed etc/schema-registry/connect-avro-distributed.properties

Connect Workers are long running processes so set an init.d or systemctl service accordingly.
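
For the plugin.path edit described above, the uncommented line in connect-avro-distributed.properties might look like the following. The path /opt/connect/plugins is a hypothetical location; use the parent of the plugins/lib folder you created on each server.

```properties
# Hypothetical location: the connector jars were copied to /opt/connect/plugins/lib
plugin.path=/opt/connect/plugins
```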

Starting the Connector (Distributed)¶

Download and install Stream Reactor. Follow the instructions here if you haven’t already done so. All paths in the quickstart are based on the location where you installed Stream Reactor.

Once Connect has started, we can use the kafka-connect-tools CLI to post in our distributed properties file for Kudu. For the CLI to work, including when using the Docker images, you will have to set the following environment variable to point to the Kafka Connect Rest API.

export KAFKA_CONNECT_REST="http://myserver:myport"

➜ bin/connect-cli create kudu-sink < conf/kudu-sink.properties

name=kudu-sink

connector.class=com.datamountaineer.streamreactor.connect.kudu.sink.KuduSinkConnector

tasks.max=1

topics=kudu-test

connect.kudu.master=quickstart

connect.kudu.kcql=INSERT INTO impala::default.kudu_test SELECT * FROM kudu-test

If you switch back to the terminal you started Kafka Connect in, you should see the Kudu Sink being accepted and the task starting.

We can use the CLI to check if the connector is up, but you should be able to see this in the logs as well.

#check for running connectors with the CLI

➜ bin/connect-cli ps

kudu-sink

INFO

__ __

/ / ____ _____ ____/ /___ ____ ____

/ / / __ `/ __ \/ __ / __ \/ __ \/ __ \

/ /___/ /_/ / / / / /_/ / /_/ / /_/ / /_/ /

/_____/\__,_/_/ /_/\__,_/\____/\____/ .___/

/_/

__ __ __ _____ _ __

/ //_/_ ______/ /_ __/ ___/(_)___ / /__

/ ,< / / / / __ / / / /\__ \/ / __ \/ //_/

/ /| / /_/ / /_/ / /_/ /___/ / / / / / ,<

/_/ |_\__,_/\__,_/\__,_//____/_/_/ /_/_/|_|

by Andrew Stevenson

(com.datamountaineer.streamreactor.connect.kudu.KuduSinkTask:37)

INFO KuduSinkConfig values:

connect.kudu.master = quickstart

(com.datamountaineer.streamreactor.connect.kudu.KuduSinkConfig:165)

INFO Connecting to Kudu Master at quickstart (com.datamountaineer.streamreactor.connect.kudu.KuduWriter$:33)

INFO Initialising Kudu writer (com.datamountaineer.streamreactor.connect.kudu.KuduWriter:40)

INFO Assigned topics (com.datamountaineer.streamreactor.connect.kudu.KuduWriter:42)

INFO Sink task org.apache.kafka.connect.runtime.WorkerSinkTask@b60ba7b finished initialization and start (org.apache.kafka.connect.runtime.WorkerSinkTask:155)

Test Records¶

Tip

If your input topic doesn’t match the target, use Lenses SQL to transform the input in real time, no Java or Scala required!

Now we need to put some records into the kudu-test topic. We can use the kafka-avro-console-producer to do this.

Start the producer and pass in a schema to register in the Schema Registry. The schema has an id field of type int and a random_field of type string.

bin/kafka-avro-console-producer \

--broker-list localhost:9092 --topic kudu-test \

--property value.schema='{"type":"record","name":"myrecord","fields":[{"name":"id","type":"int"},

{"name":"random_field", "type": "string"}]}'

Now the producer is waiting for input. Paste in the following, one record at a time:

{"id": 999, "random_field": "foo"}

{"id": 888, "random_field": "bar"}

Check for records in Kudu¶

Now check the logs of the connector. You should see this:

INFO Sink task org.apache.kafka.connect.runtime.WorkerSinkTask@68496440 finished initialization and start (org.apache.kafka.connect.runtime.WorkerSinkTask:155)

INFO Written 2 for kudu_test (com.datamountaineer.streamreactor.connect.kudu.KuduWriter:90)

In Kudu:

#demo/demo

ssh demo@quickstart -t impala-shell

SELECT * FROM kudu_test;

Query: select * FROM kudu_test

+-----+--------------+

| id | random_field |

+-----+--------------+

| 888 | bar |

| 999 | foo |

+-----+--------------+

Fetched 2 row(s) in 0.14s

Configurations¶

The Kafka Connect framework requires the following in addition to any connector-specific configurations:

| Config | Description | Type | Value |

|---|---|---|---|

| name | Name of the connector | string | This must be unique across the Connect cluster |

| topics | The topics to sink. The connector will check this matches the KCQL statement | string | |

| tasks.max | The number of tasks to scale output | int | 1 |

| connector.class | Name of the connector class | string | com.datamountaineer.streamreactor.connect.kudu.sink.KuduSinkConnector |

Connector Configurations¶

| Config | Description | Type |

|---|---|---|

| connect.kudu.master | Specifies a Kudu server | string |

| connect.kudu.kcql | Kafka connect query language expression | string |

Optional Configurations¶

| Config | Description | Type | Default |

|---|---|---|---|

| connect.kudu.write.flush.mode | Flush mode on write. 1. SYNC - flush each sink record. Batching is disabled. 2. BATCH_BACKGROUND - flush batch of sink records in background thread. 3. BATCH_SYNC - flush batch of sink records | string | SYNC |

| connect.kudu.mutation.buffer.space | Kudu Session mutation buffer space (bytes) | | 10000 |

| connect.kudu.schema.registry.url | The url for the Schema registry. This is used to retrieve the latest schema for table creation | string | http://localhost:8081 |

| connect.kudu.error.policy | Specifies the action to be taken if an error occurs while inserting the data. There are three available options: NOOP, the error is swallowed; THROW, the error is allowed to propagate; and RETRY. For RETRY the Kafka message is redelivered up to a maximum number of times specified by the connect.kudu.max.retries option | string | THROW |

| connect.kudu.max.retries | The maximum number of times a message is retried. Only valid when the connect.kudu.error.policy is set to RETRY | string | 10 |

| connect.kudu.retry.interval | The interval, in milliseconds, between retries if the sink is using connect.kudu.error.policy set to RETRY | string | 60000 |

| connect.progress.enabled | Enables the output for how many records have been processed | boolean | false |

Example¶

name=kudu-sink

connector.class=com.datamountaineer.streamreactor.connect.kudu.sink.KuduSinkConnector

tasks.max=1

topics=kudu-test

connect.kudu.master=quickstart

connect.kudu.schema.registry.url=http://myhost:8081

connect.kudu.kcql=INSERT INTO impala::default.kudu_test SELECT * FROM kudu-test AUTOCREATE DISTRIBUTEBY id INTO 5 BUCKETS

Data Type Mappings¶

| Connect Type | Kudu Data Type |

|---|---|

| INT8 | INT8 |

| INT16 | INT16 |

| INT32 | INT32 |

| INT64 | INT64 |

| BOOLEAN | BOOLEAN |

| FLOAT32 | FLOAT |

| FLOAT64 | FLOAT |

| BYTES | BINARY |

Kubernetes¶

Helm Charts are provided at our repo. Add the repo to your Helm instance and install. We recommend using the Landscaper to manage Helm Values, since typically each Connector instance has its own deployment.

Add the Helm charts to your Helm instance:

helm repo add landoop https://landoop.github.io/kafka-helm-charts/

TroubleShooting¶

Please review the FAQs and join our Slack channel.