CoAP Sink¶

Download connector: CoAP Connector 1.2 for Kafka, CoAP Connector 1.1 for Kafka

This CoAP Sink connector allows you to write messages from Kafka to CoAP.

Prerequisites¶

- Apache Kafka 0.11.x or above

- Kafka Connect 0.11.x or above

- Java 1.8

Features¶

- DTLS secure clients

- KCQL routing queries: topic to resource mapping and field selection

- Error policies for handling failures.

KCQL Support¶

INSERT INTO coap_resource SELECT { FIELD, ... } FROM kafka_topic

Tip

You can specify multiple KCQL statements separated by ; to have the connector sink multiple topics.

The CoAP sink supports KCQL, the Kafka Connect Query Language. The following KCQL support is available:

- Field selection

- Selection of target resource.

Kafka messages are stored as JSON strings in CoAP.

Example:

-- Insert mode, select all fields from topicA and write to resourceA

INSERT INTO resourceA SELECT * FROM topicA

-- Insert mode, select 3 fields and rename from topicB and write to resourceA

INSERT INTO resourceA SELECT x AS a, y AS b, z AS c FROM topicB

This is set in the connect.coap.kcql option.
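To make the field selection concrete, the following Python sketch shows the effect of a projection such as SELECT x AS a, y AS b, z AS c on a single record. It only illustrates the mapping semantics and is not the connector's implementation; the function name project and the sample record are hypothetical.

```python
import json


def project(record, fields=None):
    """Apply a KCQL-style projection to one record (illustrative only).

    fields maps source field names to output names; None means SELECT *.
    """
    if fields is None:
        selected = dict(record)
    else:
        # Keep only the selected fields, renaming each one to its alias.
        selected = {alias: record[name] for name, alias in fields.items()}
    # The sink stores Kafka messages as JSON strings in CoAP.
    return json.dumps(selected, sort_keys=True)


record = {"x": 1, "y": 2, "z": 3, "w": 4}  # hypothetical Kafka record value
print(project(record))                                   # SELECT *
print(project(record, {"x": "a", "y": "b", "z": "c"}))   # SELECT x AS a, ...
```

Fields that are not selected, such as w in the sample record, are dropped from the payload.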

DTLS Secure connections¶

The Connector uses the Californium Java API and, for secure connections, the Scandium security module provided by Californium. Scandium (Sc) is an implementation of Datagram Transport Layer Security 1.2, as specified in RFC 6347.

Please refer to the Californium certification repo page for more information.

The connector supports:

- SSL trust and key stores

- Public/Private PEM keys and PSK client/identity

- PSK Client Identity

The Sink attempts secure connections in the following order if the URI scheme of connect.coap.uri is set to secure, i.e. coaps.

If connect.coap.username is set, PSK client identity authentication is used. If connect.coap.private.key.path is additionally set, public/private key authentication is also attempted. Otherwise, the SSL trust and key stores are used.

The private key must be in PKCS8 rather than PKCS1 format. Use OpenSSL to convert it:

openssl pkcs8 -in privatekey.pem -topk8 -nocrypt -out privatekey-pkcs8.pem

Only cipher suites TLS_PSK_WITH_AES_128_CCM_8 and TLS_PSK_WITH_AES_128_CBC_SHA256 are currently supported.
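The order of authentication attempts described above can be sketched as a small decision function. This is a hedged illustration in Python, not the connector's code: the function name select_auth and the returned labels are hypothetical, and the private key option name is passed as a parameter because it is an assumption here (the default used is the name listed in the configuration table).

```python
from urllib.parse import urlparse


def select_auth(config, private_key_option="connect.coap.private.key.file"):
    """Return the authentication mechanisms to attempt, in order."""
    scheme = urlparse(config["connect.coap.uri"]).scheme
    if scheme != "coaps":
        # Plain coap:// URIs are not secured with DTLS.
        return []
    if config.get("connect.coap.username"):
        # A PSK identity is configured, so PSK is used.
        modes = ["psk"]
        if config.get(private_key_option):
            # A private key is also configured, so key authentication
            # is attempted as well.
            modes.append("public_private_keys")
        return modes
    # No PSK identity: fall back to the SSL trust and key stores.
    return ["keystore_truststore"]


print(select_auth({"connect.coap.uri": "coaps://localhost:5634",
                   "connect.coap.username": "client"}))
```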

Warning

The keystore, truststore, public and private files must be available on the local disk of the worker task.

Loading specific certificates can be achieved by providing a comma separated list for the connect.coap.certs configuration option.

The certificate chain can be set by the connect.coap.cert.chain.key configuration option.

Error Policies¶

Landoop sink connectors support error policies. These error policies allow you to control the behaviour of the sink if it encounters an error when writing records to the target system. Since Kafka retains the records, subject to the configured retention policy of the topic, the sink can ignore the error, fail the connector or attempt redelivery.

Throw

Any error on write to the target system will be propagated up and processing is stopped. This is the default behavior.

Noop

Any error on write to the target system is ignored and processing continues.

Warning

This can lead to missed errors if you don’t have adequate monitoring. Data is not lost as it’s still in Kafka, subject to Kafka’s retention policy. The sink currently does not distinguish between integrity constraint violations and other exceptions thrown by any drivers or target systems.

Retry

Any error on write to the target system causes a RetriableException to be thrown. This causes the Kafka Connect framework to pause and replay the message. Offsets are not committed. For example, if the CoAP server is offline the write fails and the message can be replayed. With the Retry policy, the issue can be fixed without stopping the sink.
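The three policies can be compared in a minimal Python sketch. It is an illustration under stated assumptions rather than the sink's implementation: in the real connector the replay is performed by the Kafka Connect framework, whereas here a simple loop stands in for it, and the names write_with_policy, write and sleep are hypothetical.

```python
import time


def write_with_policy(write, records, policy="THROW",
                      max_retries=10, retry_interval_ms=60000,
                      sleep=time.sleep):
    """Call write(records), handling failures according to the policy.

    Returns True if the records were written, False if an error was
    swallowed under NOOP. Re-raises the error for THROW, or for RETRY
    once the retries are exhausted.
    """
    retries_left = max_retries
    while True:
        try:
            write(records)
            return True
        except Exception:
            if policy == "NOOP":
                # Error is ignored; the records remain in Kafka.
                return False
            if policy == "RETRY" and retries_left > 0:
                # Wait, then replay the same records.
                retries_left -= 1
                sleep(retry_interval_ms / 1000.0)
                continue
            # THROW, or RETRY with no retries left: propagate the error.
            raise
```

Passing a no-op sleep function, for example sleep=lambda seconds: None, makes the retry path easy to exercise without waiting for the interval.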

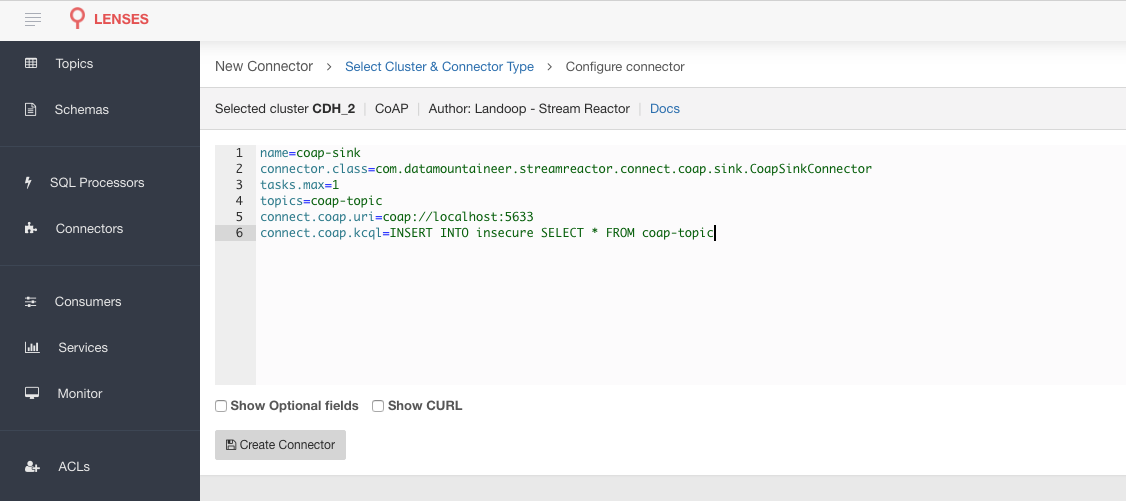

Lenses QuickStart¶

The easiest way to try this out is to use Lenses Box, the pre-configured Docker image that comes with this connector pre-installed. Go to Connectors -> New Connector -> Sink -> CoAP and paste your configuration.

CoAP Setup¶

The connector uses the Californium Java API under the hood. Copper, a Firefox browser add-on, is available so you can browse the server and resources.

We will use a simple CoAP test server we have developed for testing. Download the CoAP test server from our GitHub release page and start the server in a new terminal tab.

mkdir coap_server

cd coap_server

wget https://github.com/datamountaineer/coap-test-server/releases/download/v1.0/start-server.sh

chmod +x start-server.sh

./start-server.sh

You will see the server start listening on port 5634 for secure DTLS connections and on port 5633 for insecure connections.

m.DTLSConnector$Worker.java:-1) run() in thread DTLS-Receiver-0.0.0.0/0.0.0.0:5634 at (2017-01-10 15:41:08)

1 INFO [CoapEndpoint]: Starting endpoint at localhost/127.0.0.1:5633 - (org.eclipse.californium.core.network.CoapEndpoint.java:192) start() in thread main at (2017-01-10 15:41:08)

1 CONFIG [UDPConnector]: UDPConnector starts up 1 sender threads and 1 receiver threads - (org.eclipse.californium.elements.UDPConnector.java:261) start() in thread main at (2017-01-10 15:41:08)

1 CONFIG [UDPConnector]: UDPConnector listening on /127.0.0.1:5633, recv buf = 65507, send buf = 65507, recv packet size = 2048 - (org.eclipse.californium.elements.UDPConnector.java:261) start() in thread main at (2017-01-10 15:41:08)

Secure CoAP server powered by Scandium (Sc) is listening on port 5634

UnSecure CoAP server powered by Scandium (Sc) is listening on port 5633

Installing the Connector¶

In production, Connect should be run in distributed mode.

- Install and configure a Kafka Connect cluster

- Create a folder on each server called plugins/lib

- Copy into the above folder the required connector jars from the Stream Reactor download

- Edit connect-avro-distributed.properties in the etc/schema-registry folder and uncomment the plugin.path option. Set it to the root directory, i.e. plugins, where you deployed the Stream Reactor connector jars above.

- Start Connect:

bin/connect-distributed etc/schema-registry/connect-avro-distributed.properties

Connect Workers are long-running processes, so set up an init.d or systemd service accordingly.

Starting the Connector¶

Download and install Stream Reactor to your Kafka Connect cluster. Follow the instructions here if you haven’t already done so. All paths in the quickstart are based on the location where you installed Stream Reactor.

Once Connect has started, we can use the kafka-connect-tools CLI to post in our distributed properties file for CoAP. If you are using the Docker images you will also have to set the following environment variable so the CLI can connect to the Kafka Connect REST API.

export KAFKA_CONNECT_REST="http://myserver:myport"

➜ bin/connect-cli create coap-sink < conf/coap-sink.properties

name=coap-sink

connector.class=com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkConnector

tasks.max=1

topics=coap-topic

connect.coap.uri=coap://localhost:5633

connect.coap.kcql=INSERT INTO insecure SELECT * FROM coap-topic

If you switch back to the terminal you started Kafka Connect in, you should see the CoAP Sink being accepted and the task starting.

We can use the CLI to check if the connector is up, but you should be able to see this in the logs as well.

#check for running connectors with the CLI

➜ bin/connect-cli ps

coap-sink

INFO

__ __

/ / ____ _____ ____/ /___ ____ ____

/ / / __ `/ __ \/ __ / __ \/ __ \/ __ \

/ /___/ /_/ / / / / /_/ / /_/ / /_/ / /_/ /

/_____/\__,_/_/ /_/\__,_/\____/\____/ .___/

/_/

______ _____ _ __

/ ____/___ ____ _____ / ___/(_)___ / /__ By Andrew Stevenson

/ / / __ \/ __ `/ __ \\__ \/ / __ \/ //_/

/ /___/ /_/ / /_/ / /_/ /__/ / / / / / ,<

\____/\____/\__,_/ .___/____/_/_/ /_/_/|_|

/_/ (com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkTask:52)

Test Records¶

Tip

If your input topic doesn’t match the target, use Lenses SQL to transform the input in real time, no Java or Scala required!

Now we need to put some records into the coap-topic topic. We can use the kafka-avro-console-producer to do this.

Start the producer and pass in a schema to register in the Schema Registry. The schema has a firstName field of type string, a lastName field of type string, an age field of type int and a salary field of type double.

bin/kafka-avro-console-producer \

--broker-list localhost:9092 --topic coap-topic \

--property value.schema='{"type":"record","name":"User",

"fields":[{"name":"firstName","type":"string"},{"name":"lastName","type":"string"},{"name":"age","type":"int"},{"name":"salary","type":"double"}]}'

Now the producer is waiting for input. Paste in the following:

{"firstName": "John", "lastName": "Smith", "age":30, "salary": 4830}
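Before pasting records into the producer, it can help to check that they match the schema. The Python sketch below does a rough structural check of the sample record against the schema used above. It is not an Avro implementation: the type mapping covers only the three types in this schema, and the names PY_TYPES and conforms are hypothetical.

```python
import json

SCHEMA = json.loads("""
{"type": "record", "name": "User",
 "fields": [{"name": "firstName", "type": "string"},
            {"name": "lastName", "type": "string"},
            {"name": "age", "type": "int"},
            {"name": "salary", "type": "double"}]}
""")

# Rough mapping from the schema's Avro types to Python types.
PY_TYPES = {"string": str, "int": int, "double": (int, float)}


def conforms(record, schema):
    """Return True if record has exactly the schema's fields and types."""
    fields = schema["fields"]
    if set(record) != {f["name"] for f in fields}:
        return False
    for f in fields:
        value = record[f["name"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, PY_TYPES[f["type"]]):
            return False
    return True


record = json.loads(
    '{"firstName": "John", "lastName": "Smith", "age":30, "salary": 4830}')
print(conforms(record, SCHEMA))
```

Field names are case sensitive, so a record using firstname instead of firstName would not pass this check.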

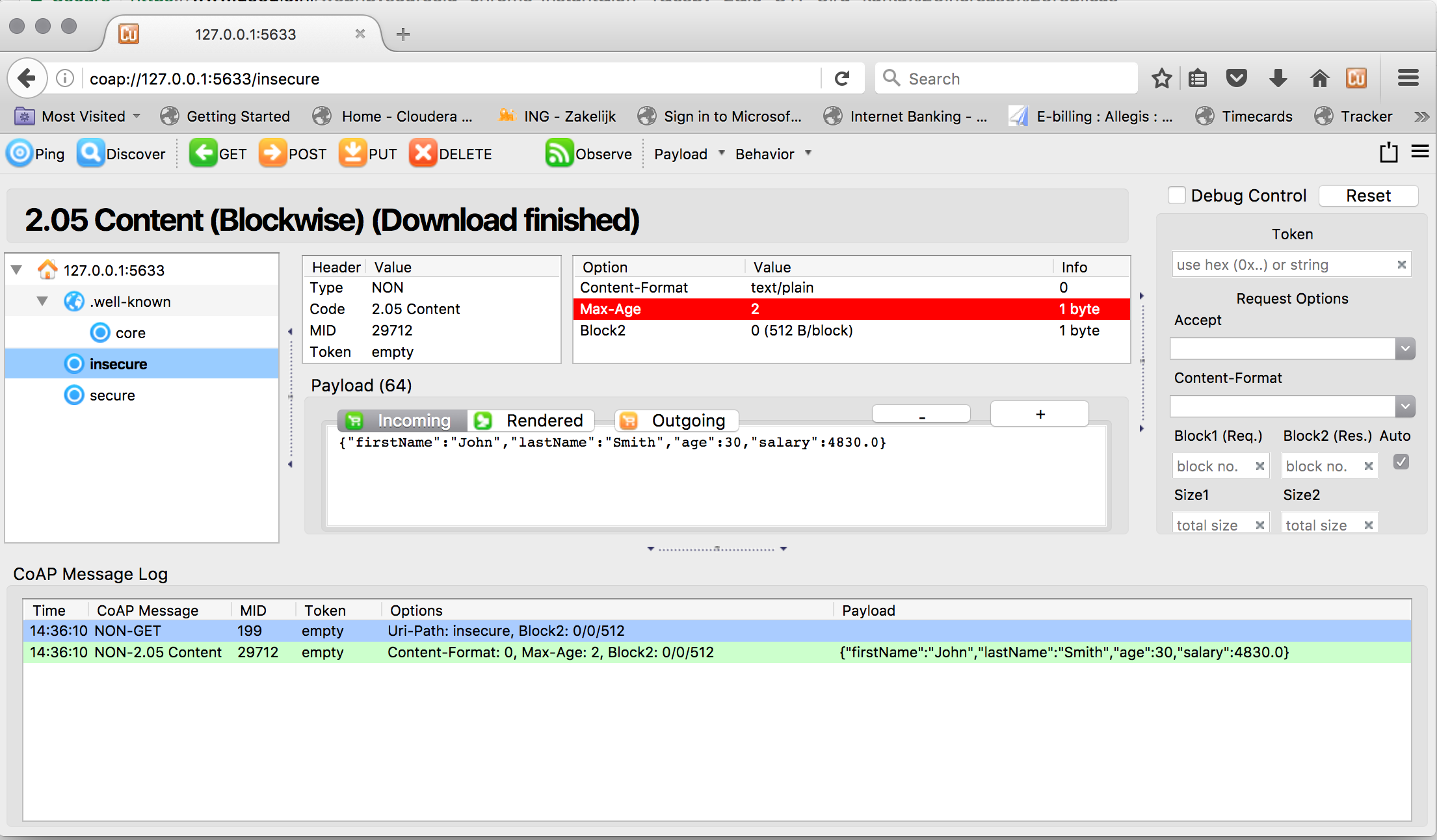

Check for Records in the CoAP server via Copper¶

Now check the logs of the connector. You should see this:

[2017-01-10 13:47:36,525] INFO Delivered 1 records for coap-topic. (com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkTask:47)

In Firefox, go to the following URL. If you have not installed Copper, do so here.

coap://127.0.0.1:5633/insecure

Hit the GET button and the records will be displayed in the bottom panel.

Configurations¶

| Config | Description | Type | Value |
|---|---|---|---|
| name | Name of the connector | string | This must be unique across the Connect cluster |
| topics | The topics to sink. The connector will check this matches the KCQL statement | string | |
| tasks.max | The number of tasks to scale output | int | 1 |
| connector.class | Name of the connector class | string | com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkConnector |

Connector Configurations¶

| Config | Description | Type |
|---|---|---|
| connect.coap.uri | URI of the CoAP server | string |
| connect.coap.kcql | The KCQL statement to select fields and route topics to resources | string |

Optional Configurations¶

| Config | Description | Type | Default |
|---|---|---|---|
| connect.coap.port | The port the DTLS connector will bind to on the Connector host | int | 0 |
| connect.coap.host | The hostname the DTLS connector will bind to on the Connector host | string | localhost |
| connect.coap.username | CoAP PSK identity | string | |
| connect.coap.password | CoAP PSK secret | string | |
| connect.coap.public.key.file | Path to the public key file for use with PSK credentials | string | |
| connect.coap.private.key.file | Path to the private key file for use with PSK credentials, in PKCS8 rather than PKCS1 format. Use OpenSSL to convert: openssl pkcs8 -in privatekey.pem -topk8 -nocrypt -out privatekey-pkcs8.pem. Only cipher suites TLS_PSK_WITH_AES_128_CCM_8 and TLS_PSK_WITH_AES_128_CBC_SHA256 are currently supported | string | |
| connect.coap.keystore.pass | The password of the key store | string | |
| connect.coap.keystore.path | The path to the keystore | string | |
| connect.coap.truststore.pass | The password of the trust store | string | |
| connect.coap.truststore.path | The path to the truststore | string | |
| connect.coap.certs | The certificates to load from the trust store | string | |
| connect.coap.cert.chain.key | The key to use to get the certificate chain | string | client |
| connect.coap.error.policy | Specifies the action to be taken if an error occurs while inserting the data. There are three available options: NOOP, the error is swallowed; THROW, the error is allowed to propagate; and RETRY. For RETRY the Kafka message is redelivered up to a maximum number of times specified by the connect.coap.max.retries option | string | THROW |
| connect.coap.max.retries | The maximum number of times a message is retried. Only valid when connect.coap.error.policy is set to RETRY | string | 10 |
| connect.coap.retry.interval | The interval, in milliseconds, between retries if the sink is using connect.coap.error.policy set to RETRY | string | 60000 |
| connect.progress.enabled | Enables the output for how many records have been processed | boolean | false |
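The retry options interact: with the defaults listed above, a failing write is retried up to 10 times at 60000 ms intervals, so roughly 10 minutes can be spent waiting before the error propagates. The Python sketch below works this out from a configuration dictionary. It is a back-of-the-envelope helper, not part of the connector, and the function name retry_window_seconds is hypothetical.

```python
def retry_window_seconds(config):
    """Approximate time spent waiting between retries before giving up."""
    policy = config.get("connect.coap.error.policy", "THROW")
    if policy not in {"NOOP", "THROW", "RETRY"}:
        raise ValueError("unknown error policy: %s" % policy)
    if policy != "RETRY":
        # The retry options only apply when the policy is RETRY.
        return 0.0
    # Defaults are taken from the table above.
    retries = int(config.get("connect.coap.max.retries", 10))
    interval_ms = int(config.get("connect.coap.retry.interval", 60000))
    return retries * interval_ms / 1000.0


print(retry_window_seconds({"connect.coap.error.policy": "RETRY"}))  # 600.0
```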

Example¶

name=coap-sink

connector.class=com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkConnector

tasks.max=1

topics=coap-topic

connect.coap.uri=coap://localhost:5633

connect.coap.kcql=INSERT INTO insecure SELECT * FROM coap-topic

connect.progress.enabled=true
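If you prefer to submit this configuration to the Kafka Connect REST API directly, the properties need to be wrapped in the JSON body expected by POST /connectors, which has a name and a config map. The Python sketch below only builds that body from the properties text and does not send any request; the function name properties_to_payload is hypothetical.

```python
import json


def properties_to_payload(text):
    """Convert key=value properties text into a POST /connectors body."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        # Split on the first '=' only, so values may contain '='.
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    return {"name": config["name"], "config": config}


PROPERTIES = """
name=coap-sink
connector.class=com.datamountaineer.streamreactor.connect.coap.sink.CoapSinkConnector
tasks.max=1
topics=coap-topic
connect.coap.uri=coap://localhost:5633
connect.coap.kcql=INSERT INTO insecure SELECT * FROM coap-topic
connect.progress.enabled=true
"""

print(json.dumps(properties_to_payload(PROPERTIES), indent=2))
```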

Kubernetes¶

Helm Charts are provided at our repo. Add the repo to your Helm instance and install. We recommend using the Landscaper to manage Helm Values, since typically each Connector instance has its own deployment.

Add the Helm charts to your Helm instance:

helm repo add landoop https://landoop.github.io/kafka-helm-charts/

Troubleshooting¶

Please review the FAQs and join our Slack channel.