AWS

Lenses can be deployed in AWS against your own Apache Kafka cluster or an Amazon Managed Streaming for Apache Kafka (Amazon MSK) cluster using a CloudFormation template, which is available in AWS Marketplace. Based on the Hardware & OS requirements for Lenses, we recommend starting with t2.large instances or any other instance type with at least 7 GB of memory.

When the deployment has finished, you can log in to Lenses with the following credentials:

username: admin
password: <ec2-instance-ID>
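Because the default password is the EC2 instance ID, you can look it up with the AWS CLI instead of the console. This is a minimal sketch. It assumes the instance was created by the Lenses CloudFormation stack; CloudFormation tags the instances it creates with `aws:cloudformation:stack-name`. It also assumes the AWS CLI is configured for the stack's region. The function name `lenses_admin_password` and the stack name in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ID of the running EC2 instance created by a
# CloudFormation stack, which is the default Lenses admin password.
# Assumes the AWS CLI is installed and configured for the stack's region.
lenses_admin_password() {
  local stack_name=$1
  aws ec2 describe-instances \
    --filters "Name=tag:aws:cloudformation:stack-name,Values=${stack_name}" \
              "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].InstanceId' \
    --output text
}

# Usage (replace with the name you gave the stack):
#   lenses_admin_password my-lenses-stack
```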

Marketplace

- AWS MSK

Lenses is installed on the EC2 instance from the .tar.gz Lenses Linux distribution and exposes inbound traffic only on the port you provide during the template deployment. The template creates its own IAM profile and installs the AWS Logs agent on the EC2 instance. The agent enables CloudWatch logging, so you can check all the available logs for the AWS stack created for Lenses.

In addition, the Lenses IAM profile can read the MSK connection details, and it can get and issue certificates through AWS Certificate Manager Private Certificate Authority (ACM PCA). These permissions let Lenses autodetect the MSK bootstrap brokers and ZooKeeper nodes. At the same time, they let it support TLS-encrypted traffic and configure Lenses in advance. More specifically, the template grants these permissions:

- logs:CreateLogGroup

- logs:CreateLogStream

- logs:PutLogEvents

- kafka:Describe*

- kafka:List*

- acm-pca:IssueCertificate

- acm-pca:GetCertificate
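The permissions above can be written as a single IAM policy document. This is useful, for example, when reviewing what the template grants or reproducing it outside CloudFormation. This is a minimal sketch. The helper name is hypothetical, and `"Resource": "*"` is an assumption; the template itself may scope resources more narrowly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an IAM policy document that allows the actions
# listed above. "Resource": "*" is an assumption; narrow it where you can.
lenses_msk_policy() {
  cat <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:PutLogEvents",
        "kafka:Describe*",
        "kafka:List*",
        "acm-pca:IssueCertificate",
        "acm-pca:GetCertificate"
      ],
      "Resource": "*"
    }
  ]
}
EOF
}

# Usage: write the document to a file and create a managed policy from it.
#   lenses_msk_policy > lenses-msk-policy.json
#   aws iam create-policy --policy-name <YOUR-POLICY-NAME> \
#     --policy-document file://lenses-msk-policy.json
```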

Required fields

| Field | Description | Type | Required |
|---|---|---|---|
| LensesLicense | The Lenses license | string | yes |
| MSKClusterARN | The ARN generated for your MSK cluster | string | yes |
| MSKSecurityGroup | The MSK security group, so that all of its traffic is allowed as inbound traffic to Lenses | string | yes |

Optional fields

| Field | Description | Type | Required |
|---|---|---|---|
| SchemaRegistryURLs | A list of Schema Registry nodes | array | no |
| ConnectURLs | A list of all the Kafka Connect clusters | array | no |

- Archive

Lenses is installed on the EC2 instance from the .tar.gz Lenses Linux distribution and exposes inbound traffic only on the port you provide during the template deployment. The template creates its own IAM profile and installs the AWS Logs agent on the EC2 instance. The agent enables CloudWatch logging, so you can check all the available logs for the AWS stack created for Lenses. More specifically, the template grants these permissions:

- logs:CreateLogGroup

- logs:CreateLogStream

- logs:PutLogEvents

Required fields

| Field | Description | Type | Required |
|---|---|---|---|
| LensesLicense | The Lenses license | string | yes |
| BrokerURLs | The connection string for the Kafka brokers, e.g. PLAINTEXT://broker.1.url:9092,PLAINTEXT://broker.2.url:9092 | string | yes |

Optional fields

| Field | Description | Type | Required |
|---|---|---|---|
| ZookeeperURLs | A list of all the ZooKeeper nodes | array | no |
| SchemaRegistryURLs | A list of Schema Registry nodes | array | no |
| ConnectURLs | A list of all the Kafka Connect clusters | array | no |
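If you prefer the CLI to the Marketplace console, you can launch the stack with `aws cloudformation create-stack`. This is a minimal sketch under several assumptions:

- You have the template URL from the Marketplace listing; `<TEMPLATE-URL>` is a placeholder.
- The parameter keys match the field names in the tables above (`LensesLicense`, `BrokerURLs`).
- The helper names are hypothetical.

A JSON parameters file is used because `BrokerURLs` contains commas, which the CLI shorthand syntax would split. `CAPABILITY_IAM` is passed because the template creates an IAM profile. If the template gives its IAM resources custom names, CloudFormation requires `CAPABILITY_NAMED_IAM` instead.

```shell
#!/usr/bin/env bash
# Escape backslashes and double quotes so a value can sit inside a JSON string.
# (Sketch only: control characters such as newlines are not escaped.)
json_escape() {
  local s=$1 bs='\' dq='"'
  s=${s//"$bs"/"$bs$bs"}
  s=${s//"$dq"/"$bs$dq"}
  printf '%s' "$s"
}

# Hypothetical helper: write the required Archive-template parameters to a
# CloudFormation parameters file. Keys are assumed from the field table.
write_lenses_params() {
  local license=$1 brokers=$2 out=$3
  cat > "$out" <<EOF
[
  {"ParameterKey": "LensesLicense", "ParameterValue": "$(json_escape "$license")"},
  {"ParameterKey": "BrokerURLs", "ParameterValue": "$(json_escape "$brokers")"}
]
EOF
}

# Hypothetical helper: launch the stack with the parameters file.
create_lenses_stack() {
  local stack_name=$1 template_url=$2 params_file=$3
  aws cloudformation create-stack \
    --stack-name "$stack_name" \
    --template-url "$template_url" \
    --parameters "file://${params_file}" \
    --capabilities CAPABILITY_IAM
}

# Usage:
#   write_lenses_params "$(cat license.json)" \
#     "PLAINTEXT://broker.1.url:9092,PLAINTEXT://broker.2.url:9092" params.json
#   create_lenses_stack lenses <TEMPLATE-URL> params.json
```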

Deployment

- AWS MSK

You can deploy Lenses against AWS MSK with one of the Marketplace templates. For Lenses and AWS MSK to be able to communicate, you need to do the following manually:

MSK VPC Security Group

- Copy the ID of the Lenses security group created during the CloudFormation deployment.

- Open the Amazon VPC console

- In the navigation pane, choose Security Groups. In the table of security groups, find the security group that belongs to the VPC of your AWS MSK cluster. Select it by checking the checkbox in the first column.

- In the Inbound Rules tab, choose Edit rules, then choose Add Rule.

- In the new rule, choose All traffic in the Type column. In the second field of the Source column, enter the ID of the Lenses security group that you copied in the first step. Choose Save rules.

This lets the Lenses EC2 instance communicate in both directions with your MSK cluster, which is the configuration recommended for AWS MSK.
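The console steps above can also be scripted with the AWS CLI. This is a minimal sketch that assumes you already know both security group IDs (`<MSK-SG-ID>` and `<LENSES-SG-ID>` are placeholders). The function name is hypothetical. `IpProtocol=-1` means all protocols and ports, matching the "All traffic" rule type.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add an inbound rule to the MSK security group that
# allows all traffic from the Lenses security group (the console steps above).
allow_lenses_to_msk() {
  local msk_sg=$1 lenses_sg=$2
  aws ec2 authorize-security-group-ingress \
    --group-id "$msk_sg" \
    --ip-permissions "IpProtocol=-1,UserIdGroupPairs=[{GroupId=${lenses_sg}}]"
}

# Usage:
#   allow_lenses_to_msk <MSK-SG-ID> <LENSES-SG-ID>
```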

- Common VPC

- You can deploy Lenses in the same VPC as your Apache Kafka infrastructure and use the provided IPs for the brokers, ZooKeeper, Schema Registry and Connect.

- VPC-to-VPC Peering

- You can deploy Lenses in a different VPC from your Apache Kafka infrastructure. By peering the two VPCs, Lenses can communicate with the brokers, ZooKeeper, Schema Registry and Connect.
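For the peering option, the AWS CLI can create the peering connection and the routes. This is a minimal sketch. It assumes both VPCs are in the same account and region, and that you know their VPC IDs, route table IDs and CIDR blocks. The function name is hypothetical. Security groups on both sides must still allow the broker, ZooKeeper, Schema Registry and Connect ports.

```shell
#!/usr/bin/env bash
# Hypothetical helper: peer the Lenses VPC with the Kafka VPC (same account and
# region assumed) and add a route in each direction.
peer_lenses_vpc() {
  local lenses_vpc=$1 kafka_vpc=$2 lenses_rtb=$3 kafka_rtb=$4
  local lenses_cidr=$5 kafka_cidr=$6 pcx

  # Request the peering connection and capture its ID.
  pcx=$(aws ec2 create-vpc-peering-connection \
    --vpc-id "$lenses_vpc" --peer-vpc-id "$kafka_vpc" \
    --query 'VpcPeeringConnection.VpcPeeringConnectionId' --output text) || return 1

  # Wait until the connection exists, then accept it.
  aws ec2 wait vpc-peering-connection-exists --vpc-peering-connection-ids "$pcx" || return 1
  aws ec2 accept-vpc-peering-connection --vpc-peering-connection-id "$pcx" || return 1

  # Send each VPC's traffic for the other VPC's CIDR through the peering connection.
  aws ec2 create-route --route-table-id "$lenses_rtb" \
    --destination-cidr-block "$kafka_cidr" --vpc-peering-connection-id "$pcx" || return 1
  aws ec2 create-route --route-table-id "$kafka_rtb" \
    --destination-cidr-block "$lenses_cidr" --vpc-peering-connection-id "$pcx"
}

# Usage:
#   peer_lenses_vpc <LENSES-VPC-ID> <KAFKA-VPC-ID> <LENSES-RTB-ID> <KAFKA-RTB-ID> \
#     <LENSES-VPC-CIDR> <KAFKA-VPC-CIDR>
```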

MSK - Prometheus Metrics

Amazon Managed Streaming for Apache Kafka (Amazon MSK) exposes JMX metrics through Open Monitoring with Prometheus endpoints. Lenses supports the Prometheus integration with the following configuration in lenses.conf:

lenses.kafka.metrics = {
  type: "AWS",
  port: [
    {id: <broker-id-1>, url:"http://b-1.<broker.1.endpoint>:11001/metrics"},
    {id: <broker-id-2>, url:"http://b-2.<broker.2.endpoint>:11001/metrics"},
    {id: <broker-id-3>, url:"http://b-3.<broker.3.endpoint>:11001/metrics"}
  ]
}

To fetch the broker IDs and the Prometheus endpoints, use the AWS CLI with the following command:

aws kafka list-nodes --cluster-arn <your-msk-cluster-arn>

The response looks like the following example and includes all the information you need:

{
  "NodeInfoList": [
    {
      "AddedToClusterTime": "2019-11-25T11:11:26.324Z",
      "BrokerNodeInfo": {
        "AttachedENIId": "eni-<your-id>",
        "BrokerId": "2",
        "ClientSubnet": "<your-vpc-subnet-id>",
        "ClientVpcIpAddress": "<your-private-vpc-ip-address>",
        "CurrentBrokerSoftwareInfo": {
          "KafkaVersion": "2.2.1"
        },
        "Endpoints": [
          "b-2.<your-msk-cluster-name>.<random-id>.c5.kafka.<region>.amazonaws.com"
        ]
      },
      "InstanceType": "m5.large",
      "NodeARN": "<your-msk-cluster-arn>",
      "NodeType": "BROKER"
    },
    # Same response for the rest of the nodes
  ]
}
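Instead of copying IDs and endpoints by hand, you can ask the AWS CLI for only the broker ID and first endpoint of each node, then turn them into the lenses.kafka.metrics block. This is a minimal sketch. It assumes Open Monitoring is enabled with the JMX Exporter on its default port 11001. It also assumes each `Endpoints` entry is the full broker host name, as in the response above. The function name is hypothetical. Some CLI versions print `BrokerId` as a number such as `2.0`, so a trailing `.0` is stripped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "<broker-id><TAB><endpoint>" lines on stdin and
# print a lenses.kafka.metrics block pointing at each broker's JMX Exporter.
msk_metrics_conf() {
  local id host sep=""
  printf 'lenses.kafka.metrics = {\n  type: "AWS",\n  port: [\n'
  while IFS=$'\t' read -r id host || [[ -n "$id" ]]; do
    [[ -z "$id" || -z "$host" ]] && continue
    id=${id%.0}  # BrokerId may be printed as 2.0; keep the integer part
    printf '%s    {id: %s, url: "http://%s:11001/metrics"}' "$sep" "$id" "$host"
    sep=$',\n'
  done
  printf '\n  ]\n}\n'
}

# Usage: select the broker ID and first endpoint of every broker node.
#   aws kafka list-nodes --cluster-arn <your-msk-cluster-arn> \
#     --query 'NodeInfoList[].BrokerNodeInfo.[BrokerId, Endpoints[0]]' \
#     --output text | msk_metrics_conf >> lenses.conf
```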

Service Discovery

The following setup covers brokers, ZooKeeper nodes, Schema Registry instances and one distributed Connect cluster. It assumes JMX is not used and everything else (ports, Connect topics, protocol) is left at its default value. The Lenses VM must have the IAM permission ec2:DescribeInstances. Schema Registry runs on the same instances as Connect. This example works if you used Confluent's AWS templates to deploy your cluster.

SD_CONFIG=provider=aws region=eu-central-1 addr_type=public_v4
SD_BROKER_FILTER=tag_key=Name tag_value=*broker*
SD_ZOOKEEPER_FILTER=tag_key=Name tag_value=*zookeeper*
SD_REGISTRY_FILTER=tag_key=Name tag_value=*worker*
SD_CONNECT_FILTERS=tag_key=Name tag_value=*worker*

If you use our Docker image or the Reference Architecture CloudFormation template, there are fields where you can fill in your own tag names.
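The filter values contain spaces and `*` wildcards, so they need quoting on a shell command line. A Docker `--env-file`, by contrast, takes the text after `=` literally. This is a minimal sketch that writes the variables above to an env file for the Docker image. Several names are assumptions: the file name, the function name, the region parameter and the `lensesio/lenses` image reference. Other settings your deployment needs (license, ports) are left out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the service-discovery settings above to a Docker
# env file. Env-file values are taken literally, so no quotes are used, and the
# heredoc does not glob, so the * wildcards are written as-is.
write_sd_env() {
  local out=$1 region=${2:-eu-central-1}
  cat > "$out" <<EOF
SD_CONFIG=provider=aws region=${region} addr_type=public_v4
SD_BROKER_FILTER=tag_key=Name tag_value=*broker*
SD_ZOOKEEPER_FILTER=tag_key=Name tag_value=*zookeeper*
SD_REGISTRY_FILTER=tag_key=Name tag_value=*worker*
SD_CONNECT_FILTERS=tag_key=Name tag_value=*worker*
EOF
}

# Usage (other required Lenses settings omitted):
#   write_sd_env lenses-sd.env
#   docker run --env-file lenses-sd.env ... lensesio/lenses
```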

Kubernetes with Helm

- Nginx Controller

Lenses can be deployed in AWS behind the NGINX Ingress Controller. Use the following commands and add the settings that follow to the values of the Lenses Helm chart.

# Create Policy for IAM Role
curl https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/iam-policy.json -O
aws iam create-policy --policy-name <YOUR-POLICY-NAME> --policy-document file://iam-policy.json
aws iam attach-user-policy --user-name <YOUR-USER-NAME> --policy-arn <CREATED-POLICY-ARN>

# Install NGINX Ingress controller
helm repo add incubator https://kubernetes-charts-incubator.storage.googleapis.com/
helm install stable/nginx-ingress --name <RELEASE-NAME>

# This will return the generated URL for the NGINX load balancer
kubectl --namespace <THE-NAMESPACE-YOU-USED-TO-DEPLOY> get services -o wide -w <RELEASE-NAME>

Running the commands above creates the NGINX Ingress Controller. Then add the following to the values of the Lenses Helm chart:

restPort: 3030
servicePort: 3030
service:
  enabled: true
  type: ClusterIP
  annotations: {}
ingress:
  enabled: true
  host: <GENERATED-LB-NGINX-URL>
  annotations:
    kubernetes.io/ingress.class: nginx
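You can fill in the host value from the controller's Service instead of copying it by hand. This is a minimal sketch. It assumes the controller Service is of type LoadBalancer and that AWS reports a DNS host name for it. `<NGINX-CONTROLLER-SERVICE>` stands for the Service name your release created, and `<LENSES-CHART>` for the Lenses chart reference. The helper names and the values file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the DNS host name AWS assigned to a LoadBalancer Service.
lb_hostname() {
  local namespace=$1 service=$2
  kubectl --namespace "$namespace" get service "$service" \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Hypothetical helper: write the Lenses chart values shown above for a given
# ingress class and host.
write_lenses_ingress_values() {
  local class=$1 host=$2 out=$3
  cat > "$out" <<EOF
restPort: 3030
servicePort: 3030
service:
  enabled: true
  type: ClusterIP
  annotations: {}
ingress:
  enabled: true
  host: ${host}
  annotations:
    kubernetes.io/ingress.class: ${class}
EOF
}

# Usage:
#   host=$(lb_hostname <THE-NAMESPACE-YOU-USED-TO-DEPLOY> <NGINX-CONTROLLER-SERVICE>)
#   write_lenses_ingress_values nginx "$host" lenses-values.yaml
#   helm install <LENSES-CHART> -f lenses-values.yaml --name <RELEASE-NAME>
```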

- Traefik Controller

Lenses can be deployed in AWS behind the Traefik Ingress Controller. Use the following commands and add the settings that follow to the values of the Lenses Helm chart.

# Create Policy for IAM Role
curl https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/iam-policy.json -O
aws iam create-policy --policy-name <YOUR-POLICY-NAME> --policy-document file://iam-policy.json
aws iam attach-user-policy --user-name <YOUR-USER-NAME> --policy-arn <CREATED-POLICY-ARN>

# Install Traefik Ingress controller
helm repo add incubator https://kubernetes-charts-incubator.storage.googleapis.com/
helm install stable/traefik --name <RELEASE-NAME>

# Watch the state of Traefik's load balancer
kubectl get svc <RELEASE-NAME>-traefik -w

# Once 'EXTERNAL-IP' is no longer '<pending>':
kubectl describe svc <RELEASE-NAME>-traefik | grep Ingress | awk '{print $3}'

restPort: 3030
servicePort: 3030
service:
  enabled: true
  type: ClusterIP
  annotations: {}
ingress:
  enabled: true
  host: <GENERATED-LB-TRAEFIK-URL>
  annotations:
    kubernetes.io/ingress.class: traefik
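Instead of watching the Service and parsing `kubectl describe`, you can poll until AWS assigns the load balancer. This is a minimal sketch that assumes the Service is named `<RELEASE-NAME>-traefik`, as in the commands above. The function name, the polling interval (10 seconds) and the attempt limit (30) are arbitrary choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a LoadBalancer Service until its external host name
# is assigned (EXTERNAL-IP is no longer <pending>), then print it.
wait_for_lb_hostname() {
  local service=$1 attempts=${2:-30} delay=${3:-10} host i
  for ((i = 0; i < attempts; i++)); do
    host=$(kubectl get service "$service" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
    if [[ -n "$host" ]]; then
      printf '%s\n' "$host"
      return 0
    fi
    sleep "$delay"
  done
  echo "timed out waiting for $service" >&2
  return 1
}

# Usage:
#   wait_for_lb_hostname <RELEASE-NAME>-traefik
```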

Note

- If you receive one of the following errors for the service account you use (e.g. default):

- Failed to list v1.Endpoints: endpoints is forbidden: User

- Failed to list v1.Service: services is forbidden: User

Then bind your service account to the cluster-admin role with RBAC YAML such as the following, which does this for the tiller service account in kube-system:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
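If the errors come from a service account other than `tiller` (for example `default`), you can create the same kind of binding imperatively with kubectl. This is a minimal sketch. The helper name and the `<service-account>-cluster-admin` binding name are hypothetical choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: bind a service account to the cluster-admin ClusterRole,
# the imperative equivalent of the ClusterRoleBinding above.
bind_cluster_admin() {
  local namespace=$1 service_account=$2
  kubectl create clusterrolebinding "${service_account}-cluster-admin" \
    --clusterrole=cluster-admin \
    --serviceaccount="${namespace}:${service_account}"
}

# Usage: for the default service account in the default namespace.
#   bind_cluster_admin default default
```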

- Application Load Balancer

Lenses can be deployed behind an AWS Application Load Balancer (ALB). Use the following commands and add the settings that follow to the values of the Lenses Helm chart. First, attach the following IAM policy to the IAM role of the EKS node instances that will run the ALB Ingress Controller.

# Create Policy for IAM Role
curl https://raw.githubusercontent.com/kubernetes-sigs/aws-alb-ingress-controller/v1.0.0/docs/examples/iam-policy.json -O
aws iam create-policy --policy-name <YOUR-POLICY-NAME> --policy-document file://iam-policy.json
aws iam attach-role-policy --role-name <EKS-NODE-INSTANCE-ROLE-NAME> --policy-arn <CREATED-POLICY-ARN>

# Install ALB Ingress controller
helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator
helm install incubator/aws-alb-ingress-controller --set clusterName=<EKS-CLUSTER-NAME> --set awsRegion=<YOUR-REGION> --set awsVpcID=<YOUR-VPC-ID> --name <RELEASE-NAME>

Running the commands above installs the ALB Ingress Controller. However, no ALB is provisioned until you deploy Lenses with an ingress configuration for ALB. Add the following options to the values of the Lenses Helm chart:

restPort: 3030
servicePort: 3030
service:
  enabled: true
  type: ClusterIP
  annotations: {}
ingress:
  enabled: true
  host:
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/subnets: <SUBNETS-VPC-OF-DEPLOYED-ALB>
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip

Then check the DNS name (FQDN) of the load balancer with:

kubectl get ingress -o wide -w

If you specify a host for the ingress, you need to add the ALB address to Route 53 to access it externally. Alternatively, deploy ExternalDNS to manage the Route 53 records automatically, which is recommended.
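If you manage the record yourself rather than with ExternalDNS, you can upsert a CNAME pointing at the ALB with the AWS CLI. This is a minimal sketch. It assumes a hosted zone you control (`<HOSTED-ZONE-ID>` is a placeholder) and an ingress in the current namespace (`<LENSES-INGRESS-NAME>` is a placeholder). The helper names and the 300-second TTL are hypothetical choices. A CNAME cannot be used at a zone apex; an alias A record is the usual alternative there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ALB host name recorded on an ingress.
alb_hostname() {
  kubectl get ingress "$1" -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'
}

# Hypothetical helper: create or update a CNAME from your host to the ALB.
upsert_lenses_cname() {
  local zone_id=$1 record=$2 alb_host=$3
  aws route53 change-resource-record-sets \
    --hosted-zone-id "$zone_id" \
    --change-batch "$(cat <<EOF
{
  "Comment": "Point ${record} at the Lenses ALB",
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${record}",
        "Type": "CNAME",
        "TTL": 300,
        "ResourceRecords": [{"Value": "${alb_host}"}]
      }
    }
  ]
}
EOF
)"
}

# Usage:
#   upsert_lenses_cname <HOSTED-ZONE-ID> lenses.example.com \
#     "$(alb_hostname <LENSES-INGRESS-NAME>)"
```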

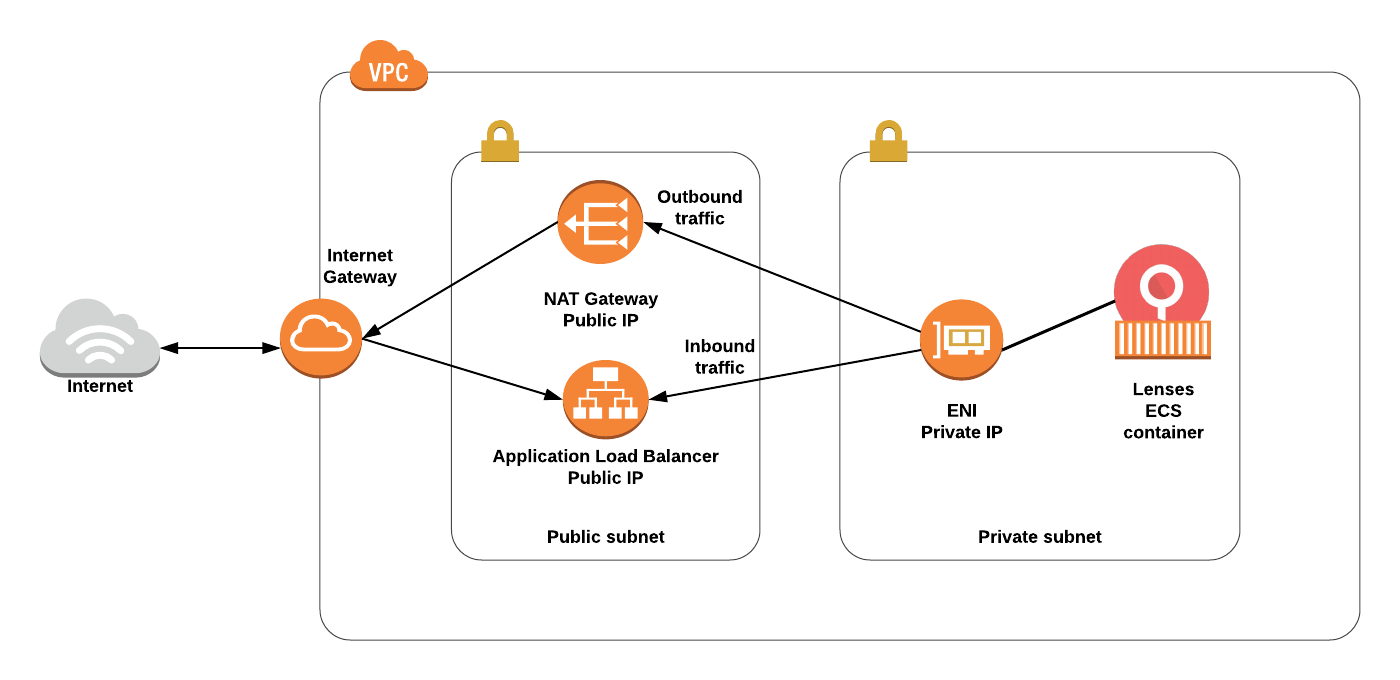

ECS Fargate

Lenses is installed in Amazon Elastic Container Service (ECS) with the AWS Fargate compute engine, which lets you run containers without having to manage servers or clusters.

Lenses AWS ECS reference architecture
