MongoDB Sink¶

Download connector MongoDB Connector for Kafka 2.1.0

The MongoDB Sink allows you to write events from Kafka to your MongoDB instance. The connector converts the value of each Kafka Connect SinkRecord to a MongoDB Document and performs an insert or an upsert, depending on the configuration you choose. The database must be created upfront; the targeted MongoDB collections will be created if they don’t exist.

Note

The database needs to be created upfront!

Prerequisites¶

- Apache Kafka 0.11.x or above

- Kafka Connect 0.11.x or above

- MongoDB 3.2.10 or above

- Java 1.8

Features¶

- KCQL routing and querying: topic-to-collection mapping and field selection

- Error policies

- Payload support for Schema.Struct with a Struct payload, Schema.String with a JSON payload, and JSON payloads with no schema

- TLS/SSL support

- Authentication - X.509, LDAP Plain, Kerberos (GSSAPI), MongoDB-CR and SCRAM-SHA-1.

KCQL Support¶

{ INSERT | UPSERT } INTO collection_name SELECT { FIELD, ... } FROM kafka_topic_name

Tip

You can specify multiple KCQL statements separated by ; to have the connector sink multiple topics.

The MongoDB sink supports KCQL, the Kafka Connect Query Language. The following KCQL support is available:

- Field selection

- Target collection selection

- Insert and upsert modes.

Insert Mode¶

Insert is the default write mode of the sink.

Kafka currently provides at-least-once delivery semantics, so this mode may produce errors if unique constraints have been implemented on the target collections. If the error policy is set to NOOP, the Sink discards the error and continues processing; however, it currently makes no attempt to distinguish violations of integrity constraints from other exceptions such as casting issues.

Upsert Mode¶

The Sink supports MongoDB upsert functionality which replaces the existing row if a match is found on the primary keys.

Kafka currently provides at-least-once delivery semantics, and order is guaranteed within partitions. If the same record is delivered twice to the sink, this mode results in an idempotent write: the existing record is updated with the values of the second delivery, which are identical.

If records are delivered with the same field or group of fields that are used as the primary key on the target table, but different values, the existing record in the target table will be updated.

Since records are delivered in the order they were written per partition the write is idempotent on failure or restart. Redelivery produces the same result.
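The difference between the two write modes under redelivery can be illustrated with a small in-memory model. This is only a sketch: the dict-backed collection, the `insert` and `upsert` helpers and the `DuplicateKeyError` class are hypothetical stand-ins, not the connector's code or the MongoDB driver API.

```python
# Toy in-memory model of a collection keyed by a unique field.
# Hypothetical helpers for illustration; NOT the connector's implementation.


class DuplicateKeyError(Exception):
    """Raised when an insert violates the (hypothetical) unique index."""


def insert(collection, record, pk):
    """Insert a record, failing if its key already exists."""
    key = record[pk]
    if key in collection:
        raise DuplicateKeyError(f"duplicate key {key!r}")
    collection[key] = dict(record)


def upsert(collection, record, pk):
    """Replace the document with a matching key, or create it."""
    collection[record[pk]] = dict(record)


record = {"ticker": "EURUSD", "price": 1.1}

# Upsert: delivering the same record twice leaves one identical document.
upserted = {}
upsert(upserted, record, "ticker")
upsert(upserted, record, "ticker")  # redelivery, e.g. after a restart

# Insert: the redelivered record violates the unique index.
inserted = {}
insert(inserted, record, "ticker")
try:
    insert(inserted, record, "ticker")
    redelivery_failed = False
except DuplicateKeyError:
    redelivery_failed = True

print(upserted)           # {'EURUSD': {'ticker': 'EURUSD', 'price': 1.1}}
print(redelivery_failed)  # True
```

With insert mode the second delivery surfaces as an error that the configured error policy has to handle, whereas upsert mode absorbs it.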

Examples:

-- Select all fields from topic fx_prices and insert into the fx collection

INSERT INTO fx SELECT * FROM fx_prices

-- Select all fields from topic fx_prices and upsert into the fx collection. The assumption is there will be a ticker field in the incoming JSON:

UPSERT INTO fx SELECT * FROM fx_prices PK ticker

-- Select specific fields from the topic sample_topic and insert into the sample collection:

INSERT INTO sample SELECT field1,field2,field3 FROM sample_topic

-- Select specific fields from the topic sample_topic and upsert into the sample collection:

UPSERT INTO sample SELECT field1,field2,field3 FROM sample_topic PK field1

-- Rename some fields while selecting all from the topic sample_topic and insert into the sample collection:

INSERT INTO sample SELECT *, field1 as new_name1,field2 as new_name2 FROM sample_topic

-- Rename some fields while selecting all from the topic sample_topic and upsert into the sample collection:

UPSERT INTO sample SELECT *, field1 as new_name1,field2 as new_name2 FROM sample_topic PK new_name1

-- Select specific fields and rename some of them from the topic sample_topic and insert into the sample collection:

INSERT INTO sample SELECT field1 as new_name1,field2, field3 as new_name3 FROM sample_topic

-- Select specific fields and rename some of them from the topic sample_topic and upsert into the sample collection:

UPSERT INTO sample SELECT field1 as new_name1,field2, field3 as new_name3 FROM sample_topic PK new_name3
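To make the effect of field selection and renaming concrete, the sketch below applies the same kind of projection to a plain Python dict. The `project` function and the sample record are hypothetical; the connector itself evaluates KCQL internally and may differ in details.

```python
def project(record, fields):
    """Return a new dict holding only the selected fields, optionally renamed.

    `fields` maps a source field name to its output name, mirroring
    `SELECT field1 as new_name1, field2, ...` in a KCQL statement.
    """
    return {target: record[source] for source, target in fields.items()}


# Hypothetical incoming record value.
record = {"field1": 1, "field2": "a", "field3": 3.5, "field4": "dropped"}

# Mirrors: SELECT field1 as new_name1, field2, field3 as new_name3
selected = project(
    record,
    {"field1": "new_name1", "field2": "field2", "field3": "new_name3"},
)

print(selected)  # {'new_name1': 1, 'field2': 'a', 'new_name3': 3.5}
```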

Payload Support¶

Schema.Struct and a Struct Payload¶

If you follow best practice when producing events, each message should carry its schema information. The best option is to send AVRO. Your connector configuration options include:

key.converter=io.confluent.connect.avro.AvroConverter

key.converter.schema.registry.url=http://localhost:8081

value.converter=io.confluent.connect.avro.AvroConverter

value.converter.schema.registry.url=http://localhost:8081

This requires the SchemaRegistry.

Note

This needs to be done in the connect worker properties if using Kafka versions prior to 0.11

Schema.String and a JSON Payload¶

Sometimes the producer would find it easier to just send a message with Schema.String and a JSON string. In this case your connector configuration should be set to value.converter=org.apache.kafka.connect.json.JsonConverter.

This doesn’t require the SchemaRegistry.

key.converter=org.apache.kafka.connect.json.JsonConverter

value.converter=org.apache.kafka.connect.json.JsonConverter

Note

This needs to be done in the connect worker properties if using Kafka versions prior to 0.11

No schema and a JSON Payload¶

There are many existing systems which are publishing JSON over Kafka and bringing them in line with best practices is quite a challenge, hence we added the support. To enable this support you must change the converters in the connector configuration.

key.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=false

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=false

Note

This needs to be done in the connect worker properties if using Kafka versions prior to 0.11

Error Policies¶

Landoop sink connectors support error policies. These error policies allow you to control the behavior of the sink if it encounters an error when writing records to the target system. Since Kafka retains the records, subject to the configured retention policy of the topic, the sink can ignore the error, fail the connector or attempt redelivery.

Throw

Any error on write to the target system will be propagated up and processing is stopped. This is the default behavior.

Noop

Any error on write to the target database is ignored and processing continues.

Warning

This can lead to missed errors if you don’t have adequate monitoring. Data is not lost as it’s still in Kafka, subject to Kafka’s retention policy. The sink currently does not distinguish between integrity constraint violations and other exceptions thrown by any drivers or the target system.

Retry

Any error on write to the target system causes a RetriableException to be thrown. This causes the Kafka Connect framework to pause and replay the message. Offsets are not committed. For example, if the database is offline the write fails and the message can be replayed. With the Retry policy, the issue can be fixed without stopping the sink.
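The three policies can be summarised as a small control-flow sketch. The `write_with_policy` function, its parameters and the failing writers are hypothetical and only model the behavior described above; the real sink delegates retries to the Kafka Connect framework and waits for the configured retry interval between attempts, which is omitted here.

```python
def write_with_policy(write, record, policy="THROW", max_retries=3):
    """Apply a NOOP / THROW / RETRY style policy around a write call.

    Returns True if the record was written, False if the error was ignored.
    """
    attempts = 0
    while True:
        try:
            write(record)
            return True
        except Exception:
            if policy == "NOOP":
                return False  # swallow the error and carry on
            if policy == "THROW":
                raise  # propagate the error and stop processing
            if policy == "RETRY":
                attempts += 1
                if attempts > max_retries:
                    raise  # retries exhausted, give up
                continue  # replay the same record
            raise ValueError(f"unknown policy {policy!r}")


calls = {"n": 0}


def flaky_write(record):
    """Hypothetical writer that fails twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] <= 2:
        raise IOError("target offline")


def always_fail(record):
    """Hypothetical writer that never succeeds."""
    raise IOError("target offline")


print(write_with_policy(flaky_write, {"id": 1}, policy="RETRY"))  # True
print(write_with_policy(always_fail, {"id": 1}, policy="NOOP"))   # False
```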

TLS/SSL¶

TLS/SSL is supported by setting ?ssl=true in the connect.mongo.connection option. The MongoDB driver will then attempt to load the truststore and keystore using the JVM system properties.

You will need to set several JVM system properties to ensure that the client is able to validate the SSL certificate presented by the server:

javax.net.ssl.trustStore: the path to a trust store containing the certificate of the signing authority

javax.net.ssl.trustStorePassword: the password to access this trust store

The trust store is typically created with the keytool command line program provided as part of the JDK. For example:

keytool -importcert -trustcacerts -file <path to certificate authority file> -keystore <path to trust store> -storepass <password>

You will also need to set several JVM system properties to ensure that the client presents an SSL certificate to the MongoDB server:

javax.net.ssl.keyStore: the path to a key store containing the client’s SSL certificates

javax.net.ssl.keyStorePassword: the password to access this key store

The key store is typically created with the keytool or the openssl command line program.
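The system properties listed above are usually passed to the Connect worker JVM as -D flags. The helper below is a sketch that assembles such a flag string; the function name, the file paths and the password are placeholders, and exporting the result through the KAFKA_OPTS environment variable is an assumption about how your worker is launched.

```python
import shlex


def jvm_tls_options(trust_store, trust_store_password,
                    key_store=None, key_store_password=None):
    """Build the -D flags for the JVM TLS system properties."""
    props = {
        "javax.net.ssl.trustStore": trust_store,
        "javax.net.ssl.trustStorePassword": trust_store_password,
    }
    # The key store is only needed when the client must present a certificate.
    if key_store is not None:
        props["javax.net.ssl.keyStore"] = key_store
        props["javax.net.ssl.keyStorePassword"] = key_store_password
    return " ".join(f"-D{name}={value}" for name, value in props.items())


# Placeholder path and password, for illustration only.
opts = jvm_tls_options("/path/to/truststore.jks", "changeit")

# Print a shell line that could be run before starting the worker.
print(f"export KAFKA_OPTS={shlex.quote(opts)}")
```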

Authentication Mechanism¶

All authentication methods are supported: X.509, LDAP Plain, Kerberos (GSSAPI), MongoDB-CR and SCRAM-SHA-1. The default as of MongoDB version 3.0 is SCRAM-SHA-1. To set the authentication mechanism, set the authMechanism in the connect.mongo.connection option.

Note

The mechanism can be set either in the connection string, which requires the password to be in plain text in the connection string, or via the connect.mongo.auth.mechanism option.

If the username is set it overrides the username/password set in the connection string and the connect.mongo.auth.mechanism has precedence.

e.g.

# default of scram

mongodb://host1/?authSource=db1

# scram explicit

mongodb://host1/?authSource=db1&authMechanism=SCRAM-SHA-1

# mongo-cr

mongodb://host1/?authSource=db1&authMechanism=MONGODB-CR

# x.509

mongodb://host1/?authSource=db1&authMechanism=MONGODB-X509

# kerberos

mongodb://host1/?authSource=db1&authMechanism=GSSAPI

# ldap

mongodb://host1/?authSource=db1&authMechanism=PLAIN
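Connection strings like the ones above can be assembled programmatically. The sketch below uses only the Python standard library; the `mongo_uri` helper and its parameters are hypothetical, and it covers only the options mentioned in this section.

```python
from urllib.parse import urlencode


def mongo_uri(hosts, auth_source=None, auth_mechanism=None, ssl=False):
    """Build a mongodb:// connection string from hosts and a few options."""
    options = {}
    if auth_source:
        options["authSource"] = auth_source
    if auth_mechanism:
        options["authMechanism"] = auth_mechanism
    if ssl:
        options["ssl"] = "true"
    uri = "mongodb://" + ",".join(hosts) + "/"
    if options:
        uri += "?" + urlencode(options)
    return uri


print(mongo_uri(["host1"], auth_source="db1", auth_mechanism="MONGODB-X509"))
# mongodb://host1/?authSource=db1&authMechanism=MONGODB-X509
```

The resulting string is the value you would place in connect.mongo.connection.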

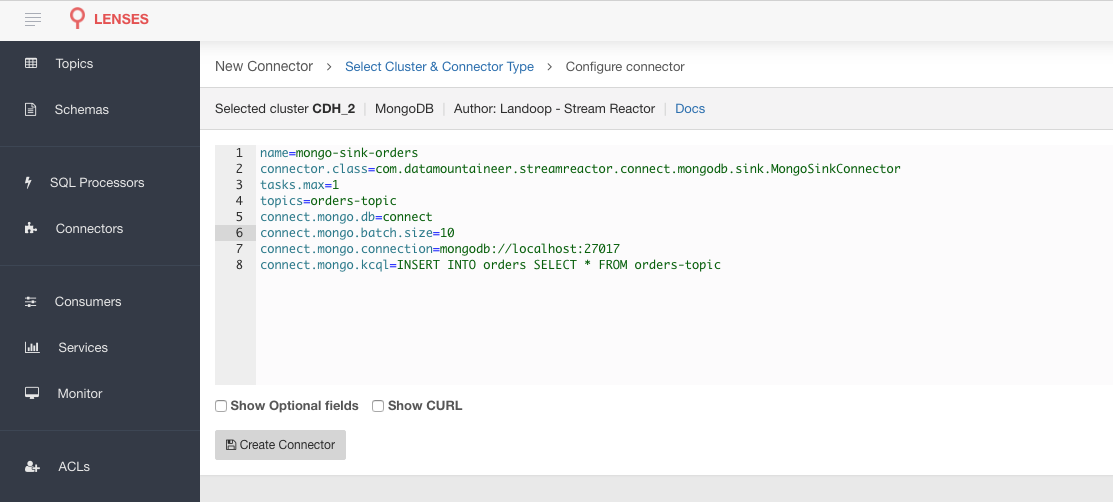

Lenses QuickStart¶

The easiest way to try this out is with Lenses Box, the pre-configured Docker image that comes with this connector pre-installed. Navigate to Connectors –> New Connector –> Sink –> MongoDB and paste your configuration.

MongoDB Setup¶

If you already have an instance of MongoDB running you can skip this step. First, download and install MongoDB Community edition. This is the manual approach for installing on Ubuntu. For other operating systems, follow the details at https://docs.mongodb.com/v3.2/administration/install-community/.

#go to home folder

➜ cd ~

#make a folder for mongo

➜ mkdir mongodb

#Download MongoDB

➜ wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-ubuntu1604-3.2.10.tgz

#extract the archive

➜ tar xvf mongodb-linux-x86_64-ubuntu1604-3.2.10.tgz -C mongodb

➜ cd mongodb

➜ mv mongodb-linux-x86_64-ubuntu1604-3.2.10/* .

#create the data folder

➜ mkdir data

➜ mkdir data/db

#Start MongoDB

➜ bin/mongod --dbpath data/db

Installing the Connector¶

Connect, in production, should be run in distributed mode.

- Install and configure a Kafka Connect cluster

- Create a folder on each server called plugins/lib

- Copy into the above folder the required connector jars from the Stream Reactor download

- Edit connect-avro-distributed.properties in the etc/schema-registry folder and uncomment the plugin.path option. Set it to the root directory, i.e. the plugins folder into which you deployed the Stream Reactor connector jars

- Start Connect: bin/connect-distributed etc/schema-registry/connect-avro-distributed.properties

Connect Workers are long running processes so set an init.d or systemctl service accordingly.

Sink Connector QuickStart¶

Start Kafka Connect in distributed mode (see install).

In this mode a Rest Endpoint on port 8083 is exposed to accept connector configurations.

We developed a Command Line Interface to make interacting with the Connect REST API easier. The CLI can be found in the Stream Reactor download under the bin folder. Alternatively, the jar can be pulled from our GitHub releases page.

Test Database¶

The Sink requires that the database already exists in MongoDB.

#from a new terminal

➜ cd ~/mongodb/bin

#start the cli

➜ ./mongo

#list all dbs

➜ show dbs

#create a new database named connect

➜ use connect

#create a dummy collection and insert one document to actually create the database

➜ db.dummy.insert({"name":"Kafka Rulz!"})

#list all dbs

➜ show dbs

Starting the Connector¶

Download, and install Stream Reactor in Kafka Connect. Follow the instructions here if you have not already done so. All paths in the quick start are based on the location you installed the Stream Reactor.

Once Connect has started, we can use the kafka-connect-tools CLI to post in our distributed properties file for MongoDB. For the CLI to work, including when using the Docker images, you will have to set the following environment variable to point to the Kafka Connect REST API.

export KAFKA_CONNECT_REST="http://myserver:myport"

➜ bin/connect-cli create mongo-sink < conf/source.kcql/mongo-sink.properties

name=mongo-sink-orders

connector.class=com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkConnector

tasks.max=1

topics=orders-topic

connect.mongo.kcql=INSERT INTO orders SELECT * FROM orders-topic

connect.mongo.db=connect

connect.mongo.connection=mongodb://localhost:27017

connect.mongo.batch.size=10
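As an alternative to the CLI, the same properties can be submitted directly to the Connect REST API, which accepts a JSON body with a name and a config map on POST /connectors. The sketch below converts properties text into that payload; the helper names are hypothetical, and `submit` is defined but not called because it needs a running Connect worker at the URL you pass in.

```python
import json
import urllib.request


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    config = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may contain '='.
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    return config


def connector_payload(config):
    """Wrap a config map in the body shape expected by POST /connectors."""
    return {"name": config["name"], "config": config}


def submit(payload, rest_url):
    """POST the payload to <rest_url>/connectors (requires a live worker)."""
    request = urllib.request.Request(
        rest_url.rstrip("/") + "/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


PROPERTIES = """
name=mongo-sink-orders
connector.class=com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkConnector
tasks.max=1
topics=orders-topic
connect.mongo.kcql=INSERT INTO orders SELECT * FROM orders-topic
connect.mongo.db=connect
connect.mongo.connection=mongodb://localhost:27017
connect.mongo.batch.size=10
"""

payload = connector_payload(parse_properties(PROPERTIES))
print(json.dumps(payload, indent=2))
```

Calling `submit(payload, "http://myserver:myport")` would then register the connector, with the same placeholder address used for KAFKA_CONNECT_REST.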

If you switch back to the terminal you started Kafka Connect in you should see the MongoDB Sink being accepted and the task starting.

We can use the CLI to check if the connector is up but you should be able to see this in logs as well.

#check for running connectors with the CLI

➜ bin/connect-cli ps

mongo-sink

INFO [ASCII-art banner: Landoop, MongoDB Sink, by Stefan Bocutiu] (com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkTask:51)

INFO Initialising Mongo writer.Connection to mongodb://localhost:27017 (com.datamountaineer.streamreactor.connect.mongodb.sink.MongoWriter$:126)

Test Records¶

Tip

If your input topic doesn’t match the target use Lenses SQL to transform in real-time the input, no Java or Scala required!

Now we need to put some records into the orders-topic. We can use the kafka-avro-console-producer to do this.

Start the producer and pass in a schema to register in the Schema Registry. The schema matches the table created earlier.

bin/kafka-avro-console-producer \

--broker-list localhost:9092 --topic orders-topic \

--property value.schema='{"type":"record","name":"myrecord","fields":[{"name":"id","type":"int"},

{"name":"created", "type": "string"}, {"name":"product", "type": "string"}, {"name":"price", "type": "double"}]}'

Now the producer is waiting for input. Paste in the following (each on a line separately):

{"id": 1, "created": "2016-05-06 13:53:00", "product": "OP-DAX-P-20150201-95.7", "price": 94.2}

{"id": 2, "created": "2016-05-06 13:54:00", "product": "OP-DAX-C-20150201-100", "price": 99.5}

{"id": 3, "created": "2016-05-06 13:55:00", "product": "FU-DATAMOUNTAINEER-20150201-100", "price": 10000}

{"id": 4, "created": "2016-05-06 13:56:00", "product": "FU-KOSPI-C-20150201-100", "price": 150}

Now if we check the logs of the connector we should see the 4 records being inserted into MongoDB:

[2016-11-06 22:30:30,473] INFO Setting newly assigned partitions [orders-topic-0] for group connect-mongo-sink-orders (org.apache.kafka.clients.consumer.internals.ConsumerCoordinator:231)

[2016-11-06 22:31:29,328] INFO WorkerSinkTask{id=mongo-sink-orders-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSinkTask:261)

#Open a new terminal and navigate to the MongoDB installation folder

➜ ./bin/mongo

> show databases

connect 0.000GB

local 0.000GB

> use connect

switched to db connect

> show collections

dummy

orders

> db.orders.find()

{ "_id" : ObjectId("581fb21b09690a24b63b35bd"), "id" : 1, "created" : "2016-05-06 13:53:00", "product" : "OP-DAX-P-20150201-95.7", "price" : 94.2 }

{ "_id" : ObjectId("581fb2f809690a24b63b35c2"), "id" : 2, "created" : "2016-05-06 13:54:00", "product" : "OP-DAX-C-20150201-100", "price" : 99.5 }

{ "_id" : ObjectId("581fb2f809690a24b63b35c3"), "id" : 3, "created" : "2016-05-06 13:55:00", "product" : "FU-DATAMOUNTAINEER-20150201-100", "price" : 10000 }

{ "_id" : ObjectId("581fb2f809690a24b63b35c4"), "id" : 4, "created" : "2016-05-06 13:56:00", "product" : "FU-KOSPI-C-20150201-100", "price" : 150 }

Bingo, our 4 rows!

Legacy topics (plain text payload with a JSON string)¶

We have found that some clients already have an infrastructure where they publish pure JSON on the topic, and the jump to following best practice and using the Schema Registry is quite an ask. So we offer support for them as well.

This time we need to start the Connect with a different set of settings.

#create a new configuration for connect

➜ cp etc/schema-registry/connect-avro-distributed.properties etc/schema-registry/connect-avro-distributed-json.properties

➜ vi etc/schema-registry/connect-avro-distributed-json.properties

Replace the following 4 entries in the config

key.converter=io.confluent.connect.avro.AvroConverter

key.converter.schema.registry.url=http://localhost:8081

value.converter=io.confluent.connect.avro.AvroConverter

value.converter.schema.registry.url=http://localhost:8081

with the following

key.converter=org.apache.kafka.connect.json.JsonConverter

key.converter.schemas.enable=false

value.converter=org.apache.kafka.connect.json.JsonConverter

value.converter.schemas.enable=false
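The replacement of the four entries can also be scripted. The sketch below rewrites properties text in memory; the `use_schemaless_json` function is hypothetical and assumes the four Avro entries appear as uncommented key=value lines, as in the listing above.

```python
JSON_CONVERTER = "org.apache.kafka.connect.json.JsonConverter"


def use_schemaless_json(properties_text):
    """Swap the Avro converter entries for schemaless JSON converter entries."""
    replacements = {
        "key.converter": f"key.converter={JSON_CONVERTER}",
        "key.converter.schema.registry.url":
            "key.converter.schemas.enable=false",
        "value.converter": f"value.converter={JSON_CONVERTER}",
        "value.converter.schema.registry.url":
            "value.converter.schemas.enable=false",
    }
    out = []
    for line in properties_text.splitlines():
        # The key is everything before the first '='; other lines pass through.
        key = line.split("=", 1)[0].strip()
        out.append(replacements.get(key, line))
    return "\n".join(out)


original = "\n".join([
    "bootstrap.servers=localhost:9092",
    "key.converter=io.confluent.connect.avro.AvroConverter",
    "key.converter.schema.registry.url=http://localhost:8081",
    "value.converter=io.confluent.connect.avro.AvroConverter",
    "value.converter.schema.registry.url=http://localhost:8081",
])

print(use_schemaless_json(original))
```

Reading the copied file, passing its contents through the function and writing the result back gives the same outcome as the manual edit in vi.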

Now let’s restart the connect instance, and then use the CLI to remove the old MongoDB Sink:

➜ bin/connect-cli rm mongo-sink

and start the new Sink with the JSON properties file to read from a different topic containing JSON records.

#start the connector for mongo

➜ bin/connect-cli create mongo-sink-orders-json < mongo-sink-orders-json.properties

View the Connect logs.

[2016-11-06 23:53:09,881] INFO MongoConfig values:

connect.mongo.retry.interval = 60000

connect.mongo.kcql = UPSERT INTO orders_json SELECT id, product as product_name, price as value FROM orders-topic-json PK id

connect.mongo.connection = mongodb://localhost:27017

connect.mongo.error.policy = THROW

connect.mongo.db = connect

connect.mongo.batch.size = 10

connect.mongo.max.retries = 20

(com.datamountaineer.streamreactor.connect.mongodb.config.MongoConfig:178)

[2016-11-06 23:53:09,927] INFO [ASCII-art banner: DataMountaineer, MongoDB Sink, by Stefan Bocutiu] (com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkTask:51)

[2016-11-06 23:53:10,270] INFO Initialising Mongo writer.Connection to mongodb://localhost:27017 (com.datamountaineer.streamreactor.connect.mongodb.sink.MongoWriter$:126)

Now it’s time to produce some records. This time we will use the simple kafka-console-producer to put plain JSON on the topic:

➜ bin/kafka-console-producer --broker-list localhost:9092 --topic orders-topic-json

{"id": 1, "created": "2016-05-06 13:53:00", "product": "OP-DAX-P-20150201-95.7", "price": 94.2}

{"id": 2, "created": "2016-05-06 13:54:00", "product": "OP-DAX-C-20150201-100", "price": 99.5}

{"id": 3, "created": "2016-05-06 13:55:00", "product": "FU-DATAMOUNTAINEER-20150201-100", "price":10000}

Following the command you should have something similar to this in the logs for your connect:

[2016-11-07 00:08:30,200] INFO Setting newly assigned partitions [orders-topic-json-0] for group connect-mongo-sink-orders-json (org.apache.kafka.clients.consumer.internals.ConsumerCoordinator:231)

[2016-11-07 00:08:30,324] INFO Opened connection [connectionId{localValue:3, serverValue:9}] to localhost:27017 (org.mongodb.driver.connection:71)

Let’s check the MongoDB database for the new records:

#Open a new terminal and navigate to the MongoDB installation folder

➜ ./bin/mongo

> show databases

connect 0.000GB

local 0.000GB

> use connect

switched to db connect

> show collections

dummy

orders

orders_json

> db.orders_json.find()

{ "_id" : ObjectId("581fc5fe53b2c9318a3c1004"), "created" : "2016-05-06 13:53:00", "id" : NumberLong(1), "product_name" : "OP-DAX-P-20150201-95.7", "value" : 94.2 }

{ "_id" : ObjectId("581fc5fe53b2c9318a3c1005"), "created" : "2016-05-06 13:54:00", "id" : NumberLong(2), "product_name" : "OP-DAX-C-20150201-100", "value" : 99.5 }

{ "_id" : ObjectId("581fc5fe53b2c9318a3c1006"), "created" : "2016-05-06 13:55:00", "id" : NumberLong(3), "product_name" : "FU-DATAMOUNTAINEER-20150201-100", "value" : NumberLong(10000) }

Bingo, our 3 rows!

Configurations¶

The Kafka Connect framework requires the following in addition to any connector-specific configurations:

| Config | Description | Type | Value |
|---|---|---|---|
| name | Name of the connector | string | This must be unique across the Connect cluster |
| topics | The topics to sink. The connector will check this matches the KCQL statement | string | |
| tasks.max | The number of tasks to scale output | int | 1 |
| connector.class | Name of the connector class | string | com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkConnector |

Connector Configurations¶

Note

Setting the username and password in the endpoints is not secure; they will be passed to Connect as plain text before being given to the driver. Use the connect.mongo.username and connect.mongo.password options.

| Config | Description | Type |
|---|---|---|
| connect.mongo.db | The target MongoDB database name | string |
| connect.mongo.connection | The MongoDB endpoints connections in the format mongodb://host1[:port1][,host2[:port2],…[,hostN[:portN]]][/[database][?options]] | string |
| connect.mongo.kcql | Kafka connect query language expression | string |

Optional Configurations¶

| Config | Description | Type | Default |
|---|---|---|---|
| connect.mongo.username | The username to use for authentication. If the username is set it overrides the username/password set in the connection string and the connect.mongo.auth.mechanism has precedence | string | |
| connect.mongo.password | The password to use for authentication | string | |
| connect.mongo.batch.size | The number of records the sink would push to mongo at once (improved performance) | int | 100 |
| connect.mongo.auth.mechanism | The mechanism to use for authentication. GSSAPI (Kerberos), PLAIN (LDAP), X.509 or SCRAM-SHA-1 | string | SCRAM-SHA-1 |
| connect.mongo.error.policy | Specifies the action to be taken if an error occurs while inserting the data. There are three available options: NOOP (the error is swallowed), THROW (the error is allowed to propagate) and RETRY. For RETRY the Kafka message is redelivered up to a maximum number of times specified by the connect.mongo.max.retries option | string | THROW |
| connect.mongo.max.retries | The maximum number of times a message is retried. Only valid when the connect.mongo.error.policy is set to RETRY | string | 10 |
| connect.mongo.retry.interval | The interval, in milliseconds, between retries if the sink is using connect.mongo.error.policy set to RETRY | string | 60000 |
| connect.progress.enabled | Enables the output for how many records have been processed | boolean | false |

Example¶

name=mongo-sink-orders

connector.class=com.datamountaineer.streamreactor.connect.mongodb.sink.MongoSinkConnector

tasks.max=1

topics=orders-topic

connect.mongo.db=connect

connect.mongo.batch.size=10

connect.mongo.connection=mongodb://localhost:27017

connect.mongo.kcql=INSERT INTO orders SELECT * FROM orders-topic
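Since the topics setting is expected to match the KCQL statement, a quick pre-flight check can catch mismatches before a configuration is posted. The sketch below is a simplistic regex-based approximation; `kcql_topics` and `check_topics` are hypothetical helpers and do not reproduce the connector's real KCQL parser or its exact validation rules.

```python
import re


def kcql_topics(kcql):
    """Extract the topic named after FROM in each ;-separated statement."""
    topics = []
    for statement in kcql.split(";"):
        match = re.search(r"\bFROM\s+(\S+)", statement, flags=re.IGNORECASE)
        if match:
            topics.append(match.group(1))
    return topics


def check_topics(config):
    """Return True when `topics` lists exactly the topics used in the KCQL."""
    declared = {t.strip() for t in config["topics"].split(",")}
    referenced = set(kcql_topics(config["connect.mongo.kcql"]))
    return declared == referenced


config = {
    "topics": "orders-topic",
    "connect.mongo.kcql": "INSERT INTO orders SELECT * FROM orders-topic",
}

print(check_topics(config))  # True
```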

Schema Evolution¶

Upstream changes to schemas are handled by the Schema Registry, which will validate the addition and removal of fields, data type changes and whether defaults are set. The Schema Registry enforces AVRO schema evolution rules. More information can be found here.

Kubernetes¶

Helm Charts are provided at our repo; add the repo to your Helm instance and install. We recommend using the Landscaper to manage Helm Values since typically each Connector instance has its own deployment.

Add the Helm charts to your Helm instance:

helm repo add landoop https://landoop.github.io/kafka-helm-charts/

TroubleShooting¶

Please review the FAQs and join our Slack channel.